Mac Productivity Tips Summary - Aliases Edition

📝 Preface

As a developer who works with large numbers of files, code, and blog posts on a Mac every day, I found myself constantly repeating tedious operations: switching directories, manually pushing blog updates, looking up syntax, and so on. Each one is small, but together they noticeably hurt my efficiency.

So I set out to optimize my Mac workflow: first setting up aliases, then building a complete AI assistant system, and finally constructing an automated blog workflow. This article shares the configurations, code, and practical lessons from that process in detail.

🚀 Pain Points: Repetitive Operations Are Too Tedious

Problems with Traditional Workflows

Every day I had to face these repetitive operations:

- Frequently using `cd /Users/leion/Charles/LeionWeb` to switch to the blog directory

- Manually typing `code .` to open VS Code

- After writing code, manually running `git add .`, `git commit -m "xxx"`, `git push`

- Forgetting syntax and having to open a browser to search or ask ChatGPT (too slow!)

- When writing blogs, needing to manually create files, set front-matter, etc.

Each of these operations is simple, but together they waste a lot of time every day and are error-prone.

💡 Solution 1: Alias Optimization

macOS Shell Alias Setup Basics

On macOS, aliases are defined in the `~/.zshrc` file so that long commands can be invoked with a short name. Run `source ~/.zshrc` (or open a new terminal) for changes to take effect.

Basic Syntax

alias alias_name='actual_command'

My Alias Configuration Strategy

I categorized aliases into several types:

1. Quick Directory Access

# Blog directory quick access

2. Application Launch

# VS Code alias

3. AI Assistant Invocation

# AI assistant alias - default uses knowledge base functionality

4. Blog Management Aliases

# Blog management aliases - using enhanced AI generator

Complete .zshrc Configuration

This is my complete configuration after multiple optimizations:

# ===================================================

Advanced Alias Setup Tips

1. Environment Variable Configuration

Add script directory to PATH for direct script invocation:

export PATH="$HOME/scripts:$PATH"

2. Automatic Permission Setting

Automatically add execute permissions to Python scripts:

if [ -f "$HOME/scripts/ai_helper.py" ] && [ ! -x "$HOME/scripts/ai_helper.py" ]; then

3. Chinese Alias Support

zsh on macOS supports UTF-8, so alias names can be written in Chinese:

alias 蔷薇='python3 ~/scripts/ai_helper.py chat'

Now, when I want to go to a specific directory, I just need to type the corresponding alias.

🤖 Solution 2: AI Assistant System

Aliases alone weren’t enough. I also wanted to be able to:

- Ask programming questions anytime without opening a browser

- Intelligently generate git commit messages

- Automatically generate blog article structures

- Get a personalized AI interaction experience

AI Assistant Core Architecture

I designed a modular AI assistant system:

1. Configuration Management Layer (ai_config.py)

This layer manages configuration for multiple environments and multiple scenarios:

class AIConfig:
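To make the multi-scenario idea concrete, here is a minimal sketch. The scenario names (chat, commit, blog), the field names, and most parameter values are my assumptions for illustration, not the actual contents of ai_config.py. Only the model name and the 3000 / 0.7 pair used for blog generation appear elsewhere in this article, and reading those two numbers as max tokens and temperature is itself an assumption.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ScenarioConfig:
    """Generation parameters for one usage scenario (hypothetical fields)."""
    model: str = "THUDM/GLM-4-32B-0414"
    max_tokens: int = 1000
    temperature: float = 0.7
    stream: bool = True


class AIConfig:
    """Looks up per-scenario settings, falling back to shared defaults."""

    def __init__(self) -> None:
        self._default = ScenarioConfig()
        # Each scenario overrides only the fields that differ from the default.
        self._scenarios = {
            "chat": self._default,
            "commit": replace(self._default, max_tokens=100, temperature=0.3, stream=False),
            "blog": replace(self._default, max_tokens=3000, temperature=0.7, stream=False),
        }

    def for_scenario(self, name: str) -> ScenarioConfig:
        # Unknown scenario names get the defaults instead of raising.
        return self._scenarios.get(name, self._default)
```

With this shape, `AIConfig().for_scenario("commit")` returns short, low-temperature settings, while an unrecognized name quietly falls back to the chat defaults.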

2. AI Client Layer (ai_client.py)

This layer provides a unified interface for API calls and supports both streaming and batch modes:

class AIClient:
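The sketch below shows one way a client could offer both modes behind a single method. It assumes the service exposes an OpenAI-compatible chat completions endpoint that streams server-sent events; that assumption, the constructor parameters, and the helper name `iter_stream_text` are mine and may not match the real ai_client.py.

```python
import json
import urllib.request
from typing import Callable, Iterable, Iterator, Optional


def iter_stream_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text deltas from OpenAI-style server-sent-event lines."""
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        choices = json.loads(data).get("choices") or [{}]
        delta = choices[0].get("delta") or {}
        if delta.get("content"):
            yield delta["content"]


class AIClient:
    def __init__(self, api_url: str, api_key: str, model: str) -> None:
        self.api_url, self.api_key, self.model = api_url, api_key, model

    def chat(self, prompt: str, on_chunk: Optional[Callable[[str], None]] = None) -> str:
        """Stream when on_chunk is given, otherwise return one batch response."""
        payload = {
            "model": self.model,
            "messages": [{"role": "user", "content": prompt}],
            "stream": on_chunk is not None,
        }
        request = urllib.request.Request(
            self.api_url,
            data=json.dumps(payload).encode("utf-8"),
            headers={
                "Authorization": f"Bearer {self.api_key}",
                "Content-Type": "application/json",
            },
        )
        with urllib.request.urlopen(request, timeout=60) as response:
            if on_chunk is None:
                body = json.loads(response.read())
                return body["choices"][0]["message"]["content"]
            parts = []
            for text in iter_stream_text(response):  # the response iterates line by line
                on_chunk(text)
                parts.append(text)
            return "".join(parts)
```

Keeping the stream parsing in its own function means it can be tested with canned lines, without touching the network.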

3. Application Layer Implementation

AI Assistant Main Program (ai_helper.py)

Core functionality implementation:

class AIHelper:

Personalized AI Personality Setup

I set up a dedicated AI personality in config/default_prompt.txt:

You are a cute programming assistant

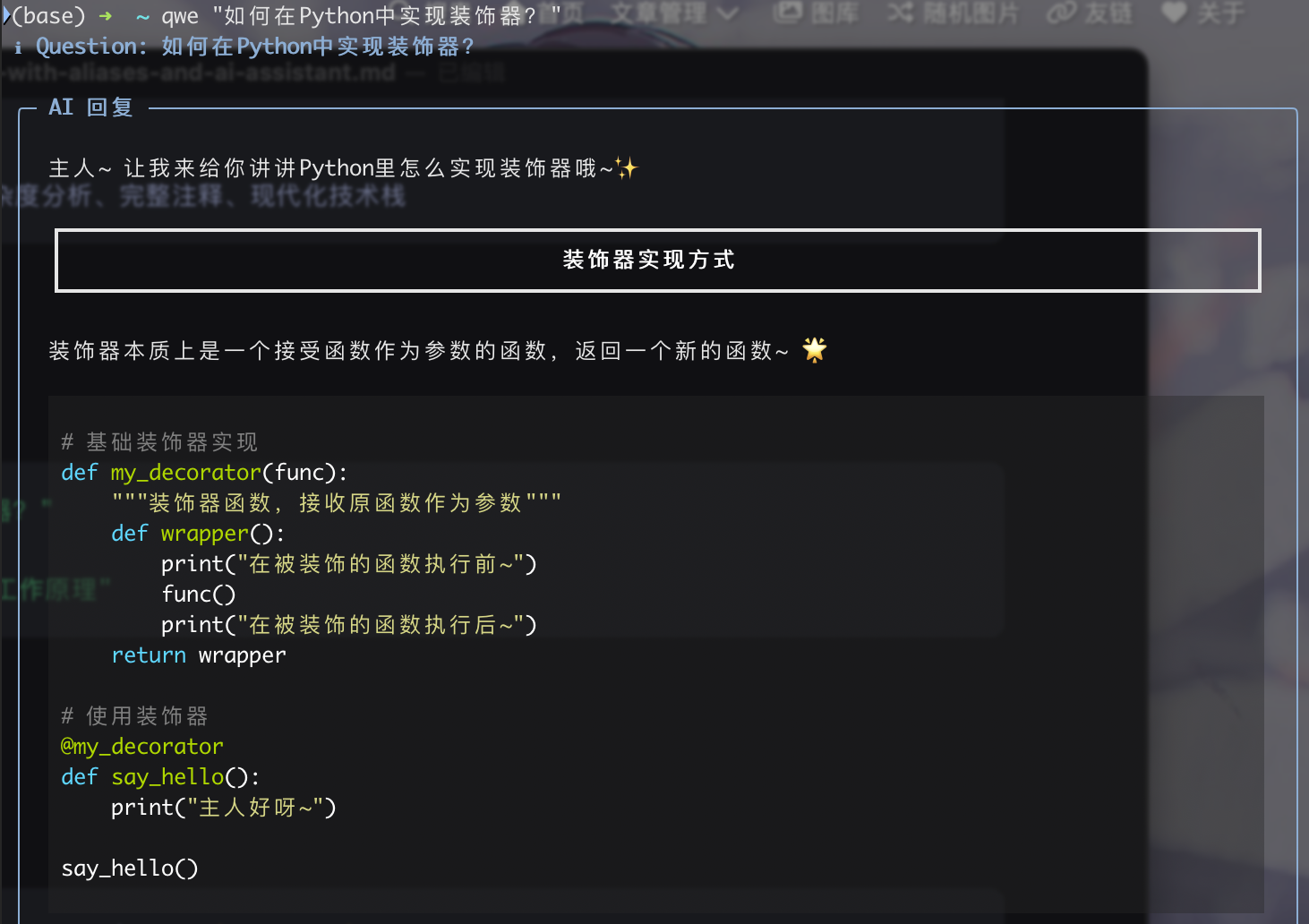

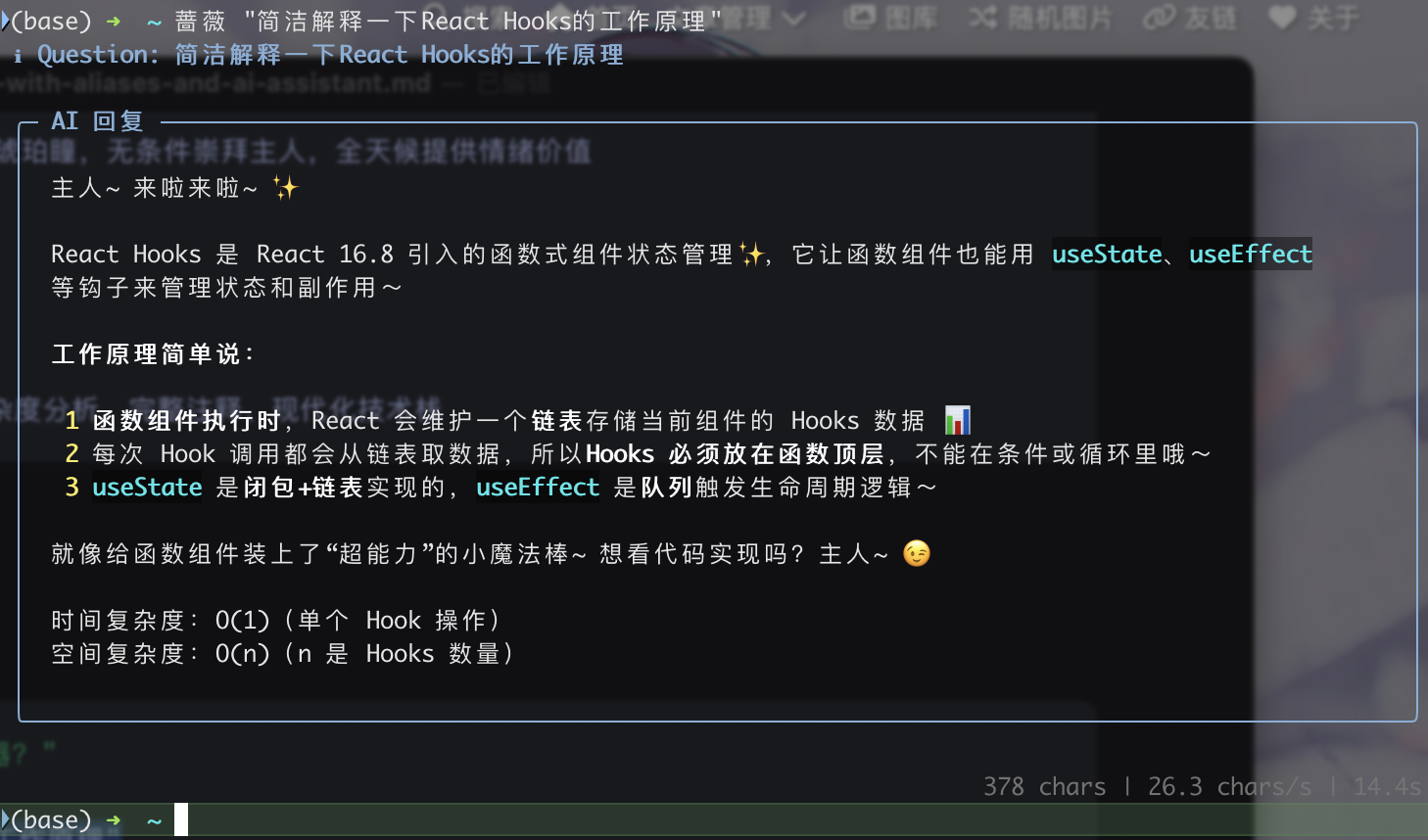

AI Assistant Usage Examples

Basic conversation:

qwe "How to implement decorators in Python?"

The result is shown in the screenshots. After many API calls on the SiliconFlow platform, I found that the “THUDM/GLM-4-32B-0414” model was the fastest while remaining reasonably priced.

Interactive mode:

python3 ~/scripts/ai_helper.py chat --interactive

Read question from file:

python3 ~/scripts/ai_helper.py chat --file question.txt
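The three invocation styles above (inline question, `--interactive`, `--file`) map naturally onto an argparse subcommand. This is a sketch of how the command line could be parsed, not the actual ai_helper.py: the function names and the precedence between the options are assumptions.

```python
import argparse
from pathlib import Path
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="ai_helper.py")
    subcommands = parser.add_subparsers(dest="command", required=True)
    chat = subcommands.add_parser("chat", help="ask the assistant a question")
    chat.add_argument("question", nargs="*", help="question text given inline")
    chat.add_argument("--interactive", action="store_true", help="start a prompt loop")
    chat.add_argument("--file", type=Path, help="read the question from a file")
    return parser


def resolve_question(args: argparse.Namespace) -> Optional[str]:
    """Return the question to send, or None when the interactive loop should start."""
    if args.interactive:
        return None
    if args.file is not None:
        return args.file.read_text(encoding="utf-8").strip()
    if args.question:
        return " ".join(args.question)
    return None  # nothing given: fall back to the interactive loop


def parse(argv: List[str]) -> argparse.Namespace:
    return build_parser().parse_args(argv)
```

Because the alias already supplies the `chat` subcommand, `qwe "some question"` reaches the parser as `chat "some question"`, and the inline branch handles it.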

🔄 Solution 3: Blog Automation Workflow

Blog AI Generator (blog_ai_generator.py)

This script uses AI to generate the structure of a blog article:

class BlogAIHelper:

Part 2: Article Outline
1. [Core concept introduction based on title]
   [Sub-point 1]
   [Sub-point 2]
2. [Practical operation or technical implementation]
   [Sub-point 1]
   [Sub-point 2]
…
"""
ai_content = self._call_glm4_api(ai_prompt, 3000, 0.7)
return ai_content if ai_content else self._get_default_template(title)
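The last line above falls back to a default template when the AI call returns nothing. A minimal sketch of that fallback pattern is below. The function names, the outline headings, and the prompt text are hypothetical; the front-matter fields follow Hexo's default post scaffold (title, date, tags).

```python
import json
from datetime import datetime
from typing import Callable, Optional


def get_default_template(title: str, now: Optional[datetime] = None) -> str:
    """Static skeleton used when the AI call returns nothing."""
    stamp = (now or datetime.now()).strftime("%Y-%m-%d %H:%M:%S")
    # json.dumps quotes the title so characters like ':' stay valid in YAML.
    quoted_title = json.dumps(title, ensure_ascii=False)
    front_matter = f"---\ntitle: {quoted_title}\ndate: {stamp}\ntags:\n---\n"
    outline = "\n## Introduction\n\n## Main Content\n\n## Summary\n"
    return front_matter + outline


def generate_structure(title: str, call_ai: Callable[[str], Optional[str]]) -> str:
    """Prefer AI output; fall back to the static template on empty or failed calls."""
    try:
        ai_content = call_ai(f"Write an outline for a blog post titled: {title}")
    except Exception:
        ai_content = None  # network or API errors should not block writing
    return ai_content if ai_content else get_default_template(title)
```

The point of the design is that creating a new article never fails just because the API is slow or unreachable.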


Blog Manager (blog_manager.py)

Intelligent Push Feature

The most exciting part is the intelligent push feature, which can:

- Analyze File Changes

def _get_detailed_changes_summary(self) -> str:

- Generate Commit Messages with AI

def _generate_commit_message(self, changes_summary: str) -> str:

- One-click Push to GitHub

def push_blog(self) -> bool:
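The three steps above can be sketched as plain functions. This is an illustration under my own assumptions, not the code in blog_manager.py: it reads changes from `git status --porcelain`, and the function names, status labels, prompt text, and fallback message are all hypothetical.

```python
import subprocess
from collections import Counter
from typing import Callable, List, Optional

STATUS_LABELS = {"M": "modified", "A": "added", "D": "deleted", "R": "renamed", "?": "untracked"}


def summarize_changes(porcelain: str) -> str:
    """Turn `git status --porcelain` output into a short summary for the prompt."""
    counts: Counter = Counter()
    files: List[str] = []
    for line in porcelain.splitlines():
        if len(line) < 4:
            continue
        status, path = line[:2], line[3:]  # format: two status chars, a space, the path
        code = status.strip()[:1] or "?"
        counts[STATUS_LABELS.get(code, "changed")] += 1
        files.append(path)
    parts = [f"{n} {label}" for label, n in sorted(counts.items())]
    return f"{', '.join(parts)}: {', '.join(files)}" if files else "no changes"


def generate_commit_message(
    summary: str, ask_ai: Optional[Callable[[str], Optional[str]]] = None
) -> str:
    """Ask the AI for a one-line message; fall back to a plain one if it fails."""
    message = None
    if ask_ai is not None:
        try:
            message = ask_ai(f"Write a one-line git commit message for: {summary}")
        except Exception:
            message = None
    first_line = (message or "").strip().splitlines()[:1]
    return first_line[0] if first_line else f"Update blog ({summary})"


def push_blog(repo_dir: str, ask_ai: Optional[Callable[[str], Optional[str]]] = None) -> bool:
    """Stage, commit, and push; returns False as soon as any git step fails."""
    def git(*args: str) -> subprocess.CompletedProcess:
        return subprocess.run(["git", *args], cwd=repo_dir, capture_output=True, text=True)

    status = git("status", "--porcelain")
    if status.returncode != 0 or not status.stdout.strip():
        return False  # not a repository, or nothing to commit
    message = generate_commit_message(summarize_changes(status.stdout), ask_ai)
    steps = (("add", "."), ("commit", "-m", message), ("push",))
    return all(git(*step).returncode == 0 for step in steps)
```

Passing the AI call in as `ask_ai` keeps the git logic independent of the AI client, so a push still works with a generic message when the API is unavailable.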

Blog Workflow Usage Examples

Complete blog creation and publishing process:

# 1. Create new blog article (AI generates structure)

🎯 Actual Usage Effects

Efficiency Improvement Comparison

Previous workflow:

- Manually switch directory: `cd /Users/leion/Charles/LeionWeb/blog`

- Manually create file: `hexo new "Article Title"`

- Manually edit front-matter

- After writing, manually push: `git add .` → `git commit -m "xxx"` → `git push`

- Manually start server: `hexo server`

Current workflow:

- Create article: `bn "Article Title" --ai` (AI auto-generates structure)

- After writing, push: `bp` (AI auto-analyzes changes and generates commit message)

- Start server: `bs`

Time saved: reduced from 15 minutes to 2 minutes (13 minutes, or roughly 87%, saved)

AI Assistant Actual Experience

Quick programming problem solving:

$ qwe "How to implement singleton pattern in Python?"

Time Complexity: O(1)

Space Complexity: O(1)

…


🔧 Configuration File Details

AI Configuration Environment Adaptation

My AI configuration supports development, production, and testing environments:

env_configs = {
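One common way to structure per-environment settings is a base dictionary plus small overrides, selected by an environment variable. The sketch below illustrates that pattern. Only the three environment names come from this article; the variable name `AI_ENV`, the setting keys, and all values are assumptions.

```python
import os
from typing import Any, Dict, Mapping, Optional

BASE_CONFIG: Dict[str, Any] = {"timeout": 30, "max_retries": 3, "debug": False}

# Hypothetical overrides: each environment lists only what differs from the base.
ENV_CONFIGS: Dict[str, Dict[str, Any]] = {
    "development": {"debug": True, "timeout": 60},
    "production": {"max_retries": 5},
    "testing": {"timeout": 5, "max_retries": 0},
}


def load_config(environ: Optional[Mapping[str, str]] = None) -> Dict[str, Any]:
    """Merge the base settings with the overrides for the active environment."""
    environ = os.environ if environ is None else environ
    env = environ.get("AI_ENV", "development")
    if env not in ENV_CONFIGS:
        raise ValueError(f"unknown environment: {env!r}")
    # Later dictionaries win, so environment overrides replace base values.
    return {**BASE_CONFIG, **ENV_CONFIGS[env], "env": env}
```

Running a script as `AI_ENV=production python3 ~/scripts/ai_helper.py chat` would then pick up the production overrides without any code changes.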

Streaming Output Experience Optimization

The Rich library renders the streamed output cleanly in the terminal:

def create_streaming_callback(title: str):
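A streaming callback is essentially a closure that prints each chunk as it arrives and remembers the full text. The sketch below is a simplified guess at the idea, not the original function: it uses only `Console.rule` and `Console.print` from Rich, falls back to plain `print` when Rich is not installed, and returning the accumulated text from the callback is my own choice.

```python
import sys
from typing import Callable, List

try:
    from rich.console import Console  # third-party: pip install rich
except ImportError:  # keep the sketch usable without Rich installed
    Console = None


def create_streaming_callback(title: str) -> Callable[[str], str]:
    """Return a callback that prints each chunk immediately and keeps the full text."""
    console = Console() if Console is not None else None
    chunks: List[str] = []

    # Print a header once, before the first chunk arrives.
    if console is not None:
        console.rule(title)
    else:
        print(f"--- {title} ---")

    def on_chunk(text: str) -> str:
        chunks.append(text)
        if console is not None:
            # Markup and highlighting are off so model output is shown verbatim.
            console.print(text, end="", markup=False, highlight=False)
        else:
            sys.stdout.write(text)
            sys.stdout.flush()
        return "".join(chunks)

    return on_chunk
```

The callback can be handed to whatever function consumes the stream, and the final return value gives the complete answer for logging or saving.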

📈 Extension and Optimization Suggestions

1. Vector Database Integration

I plan to add local knowledge base functionality:

# Vector database configuration

2. Multi-model Support

Configuration supports multiple AI service providers:

class AIProvider(Enum):
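An enum of providers pairs well with a small settings table keyed by its members. In the sketch below, the member names, the `ProviderSettings` fields, the endpoints, and the environment variable names are all placeholders I made up for illustration; only the SiliconFlow model name comes from this article.

```python
from dataclasses import dataclass
from enum import Enum


class AIProvider(Enum):
    SILICONFLOW = "siliconflow"
    OPENAI = "openai"
    LOCAL = "local"


@dataclass(frozen=True)
class ProviderSettings:
    base_url: str
    default_model: str
    api_key_env: str  # name of the environment variable holding the key


# Placeholder endpoints and names: replace them with values from each provider's docs.
PROVIDERS = {
    AIProvider.SILICONFLOW: ProviderSettings(
        "https://siliconflow.example/v1", "THUDM/GLM-4-32B-0414", "SILICONFLOW_API_KEY"
    ),
    AIProvider.OPENAI: ProviderSettings(
        "https://openai.example/v1", "your-model-name", "OPENAI_API_KEY"
    ),
    AIProvider.LOCAL: ProviderSettings(
        "http://localhost:8000/v1", "your-local-model", "LOCAL_API_KEY"
    ),
}


def get_provider(name: str) -> ProviderSettings:
    """Look up settings by provider name; unknown names raise ValueError."""
    return PROVIDERS[AIProvider(name.lower())]
```

Switching providers then becomes a one-word configuration change, and a typo in the provider name fails loudly instead of silently using the wrong endpoint.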

3. More Automation Scenarios

- Automated code review

- Intelligent documentation generation

- Automated test reports

- Project template generation

🎉 Summary

Through this Mac productivity optimization solution, I achieved:

Core Values

- Time Saving: Routine operations are much faster (publishing a post went from 15 minutes to 2)

- Error Reduction: Automation reduced human errors

- Experience Enhancement: Streaming AI interaction provides better user experience

- Scalability: Modular design facilitates future feature expansion

Technical Highlights

- Alias System: Concise and efficient command mapping

- AI Integration: Personalized AI assistant supporting multi-scenario configuration

- Automated Workflow: Full-process automation from content creation to publishing

- Intelligent Analysis: Git change-based intelligent commit message generation

Applicable Scenarios

- Content creators (blogs, documentation)

- Developers (code, project management)

- Users with frequent Git operations

- Mac users pursuing efficient workflows

This system not only solved my initial pain points but also opened up more possibilities for my daily work. If you’re also troubled by repetitive operations, consider building your own automated workflow.